Enhancing mobile robot navigation: integrating reactive autonomy through deep learning and fuzzy behavior

Reactive autonomous navigation of a mobile robot based on deep learning and fuzzy behaviors


License terms

This work is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License.

Copyright statement

The authors assign exclusively to Universidad EIA, with the right to transfer them to third parties, all exploitation rights arising from works accepted for publication in Revista EIA, as well as in any product derived from it, and in particular the rights of reproduction, distribution, public communication (including interactive making available), and transformation (including adaptation, modification and, where applicable, translation), for all forms of exploitation (by way of example and without limitation: in print, electronic, online, computer-media, or audiovisual format, as well as in any other format, including for promotional or advertising purposes and/or for the creation of derivative products), worldwide and for the full legal term of the rights provided for in the current consolidated text of the Intellectual Property Law. The authors make this assignment without any right to remuneration or compensation.

The authorization granted to Revista EIA takes effect from the date on which the work is included in the corresponding volume and issue in the Open Journal Systems platform of Revista EIA, as well as in the various databases and indexes in which the journal is indexed.

All content of Revista EIA is published under the Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License.



Abstract

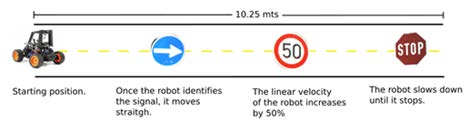

Objective: This study aimed to develop a control architecture for reactive autonomous navigation of a mobile robot that integrates deep learning techniques with fuzzy behaviors driven by traffic signal recognition.

Materials: Transfer learning was applied, using the Inception V3 network as the base model, to train a neural network that identifies traffic signals. The experiments were conducted on a Donkey Car, an open-source mobile robot with Ackermann steering and limited onboard computing capacity.

Results: The transfer learning approach achieved 96.2% accuracy in identifying traffic signals. However, the frame rate (frames per second, FPS) dropped during the test-track runs, which was attributed to the limited computational capacity of the robot's Raspberry Pi.

Conclusions: By combining deep learning and fuzzy behaviors, the study showed that the proposed control architecture improves the robot's autonomous navigation capabilities. Integrating pre-trained models with fuzzy logic gave the robot adaptability and responsiveness in dynamic traffic scenarios. Future research could optimize system parameters and explore applications in more complex environments.
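The abstract does not specify how a recognized traffic signal is turned into a driving command. As an illustration only, the following Python sketch shows one common way to couple a classifier's output to fuzzy behaviors: the confidence of the predicted label is fuzzified into low, medium, and high sets, each fired rule proposes a (throttle, steering) pair, and a zero-order Sugeno weighted average blends them. Everything here is an assumption, not the authors' controller: the label names (`stop`, `left`, `right`), the membership breakpoints, the rule outputs, the steering sign convention, and the hypothetical `fuzzy_command` function. The classifier (for example, the retrained Inception V3 network) is assumed to supply one `(label, confidence)` pair per frame.

```python
# Hypothetical sketch (not the authors' controller): blending a traffic-signal
# classifier's (label, confidence) output into fuzzy driving behaviors.
# Assumed: label names, membership breakpoints, rule outputs, steering sign
# convention, and zero-order Sugeno (weighted-average) defuzzification.
from typing import Dict, List, Tuple

Command = Tuple[float, float]  # (throttle, steering)

CRUISE: Command = (0.30, 0.0)  # assumed default behavior: keep moving straight


def ramp_down(x: float, a: float, b: float) -> float:
    """Left shoulder: 1 for x <= a, 0 for x >= b, linear in between."""
    if x <= a:
        return 1.0
    if x >= b:
        return 0.0
    return (b - x) / (b - a)


def ramp_up(x: float, a: float, b: float) -> float:
    """Right shoulder: 0 for x <= a, 1 for x >= b, linear in between."""
    return 1.0 - ramp_down(x, a, b)


def triangle(x: float, a: float, b: float, c: float) -> float:
    """Triangle with feet at a and c and peak at b (requires a < b < c)."""
    if x <= a or x >= c:
        return 0.0
    if x <= b:
        return (x - a) / (b - a)
    return (c - x) / (c - b)


def fuzzify_confidence(conf: float) -> Dict[str, float]:
    """Degrees of membership of the classifier confidence in each fuzzy set."""
    return {
        "low": ramp_down(conf, 0.3, 0.6),
        "medium": triangle(conf, 0.4, 0.65, 0.9),
        "high": ramp_up(conf, 0.75, 0.95),
    }


# Assumed rule base: (signal label, confidence set) -> (throttle, steering).
# Steering convention assumed here: negative = left, positive = right.
RULES: Dict[Tuple[str, str], Command] = {
    ("stop", "high"): (0.0, 0.0),
    ("stop", "medium"): (0.15, 0.0),
    ("left", "high"): (0.20, -0.6),
    ("left", "medium"): (0.25, -0.3),
    ("right", "high"): (0.20, 0.6),
    ("right", "medium"): (0.25, 0.3),
}
KNOWN_LABELS = {label for label, _ in RULES}


def fuzzy_command(label: str, conf: float) -> Command:
    """Fire matching rules and blend their outputs by weighted average."""
    degrees = fuzzify_confidence(conf)
    fired: List[Tuple[float, Command]] = [
        (degrees[level], out)
        for (sign, level), out in RULES.items()
        if sign == label and degrees[level] > 0.0
    ]
    # Unknown labels, and the "low" part of a known label's confidence,
    # vote for the cruise behavior instead of reacting to the detection.
    cruise_weight = degrees["low"] if label in KNOWN_LABELS else 1.0
    if cruise_weight > 0.0:
        fired.append((cruise_weight, CRUISE))
    total = sum(w for w, _ in fired)
    if total == 0.0:
        return CRUISE
    throttle = sum(w * out[0] for w, out in fired) / total
    steering = sum(w * out[1] for w, out in fired) / total
    return throttle, steering
```

For example, `fuzzy_command("stop", 0.97)` returns `(0.0, 0.0)`, while an unrecognized label such as `"none"` yields the cruise command `(0.3, 0.0)`. Letting low-confidence detections partly fall back to cruising is one way to keep a single misclassified frame from triggering an abrupt maneuver, which matters when the frame rate is limited.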


